April 4, 2017 From rOpenSci (https://deploy-preview-121--ropensci.netlify.app/blog/2017/04/04/gsodr/). Except where otherwise noted, content on this site is licensed under the CC-BY license.

Everybody talks about the weather, but nobody does anything about it. - Charles Dudley Warner

As a scientist who models plant diseases, I use a lot of weather data. Often this data is not available for areas of interest. Previously, I worked with the International Rice Research Institute (IRRI) and often the countries I was working with did not have weather data available or I was working on a large area covering several countries and needed a single source of data to work from. Other scientists who work with crop biophysical models to model crop yields also have similar weather data needs and may experience similar issues with data availability.

The United States National Oceanic and Atmospheric Administration’s (NOAA) National Centers for Environmental Information (NCEI 1) provides several sources of data, one of which is the Global Surface Summary of the Day (GSOD) data. The data are attractive because they are daily time-step and ground or buoy station based, freely available, and the data span several years, 1929 to current, with data from 1973 to current being the most complete. For more information on the GSOD data, please see the description of the data provided by NCEI, https://www7.ncdc.noaa.gov/CDO/GSOD_DESC.txt.

While the GSOD data are a valuable source of weather data with global coverage. The data files can be cumbersome and difficult to work with for research purposes. So instead of just talking about it, I did something about it. The GSODR package aims to make it easy to find, transfer and format the data you need for use in analysis. The package provides four main functions for facilitating this:

get_GSOD()- the main function that will query and transfer files from the FTP server, reformat them and return a data.frame in R or save a file to diskreformat_GSOD()- the workhorse, this function takes individual station files on the local disk and reformats them returning a data.frame in Rnearest_stations()- this function returns a data frame containing a list of stations and their metadata that fall within the given radius of a point specified by the userget_station_list()- this function retrieves the most up-to-date list of stations and corresponding metadata

When reformatting data either with get_GSOD() or reformat_GSOD(), all units are converted to International System of Units (SI), e.g., inches to millimetres and Fahrenheit to Celsius. File output can be saved as a Comma Separated Value (CSV) file or in a spatial GeoPackage (GPKG) file, implemented by most major GIS software products, summarising each year by station, which also includes vapour pressure (ea and es) and relative humidity variables calculated from existing data in GSOD by the package.

Documentation is provided for GSODR using pkgdown, which you can find on the GSODR website, https://docs.ropensci.org/GSODR// or of course in the usual R vignettes and help files.

How Can You Use GSODR?

How Can You Use GSODR?

Recently a colleague contacted me asking if I knew of or had weather data for a time period covering 1960 to 2015 for selected provinces in the Philippines where the International Rice Research Institute (IRRI) has conducted surveys. The IRRI survey loop in Central Luzon is a study that aims to monitor the changes in rice farming in the major rice producing area of the Philippines, the Central Luzon region, which is called the “rice bowl of the Philippines”. In this survey data have been collected several times since the 1960s, see the Farm Household Survey Database webpage for the data collected data. Using the GSODR package I was able to retrieve weather data from stations within a 100km radius of the centre of the provinces included in the survey and provide my colleague with a CSV file of weather data from ground-based weather stations.

As an example of how we can use GSODR, I will demonstrate the following:

-

retrieving a spatial object of provincial level data;

-

sub-setting this data for the provinces of interest;

-

merging the polygons into one object;

-

finding the centroid of this resulting polygon;

-

using the centroid of the polygon to find stations within 100km of this point;

-

determining which stations provide data for the specified time-period, 1960-2015; and

-

downloading the station files and creating a single CSV file of the data for analysis.

Retrieve PHL Provincial Data and Select Loop Provinces

Retrieve PHL Provincial Data and Select Loop Provinces

As a first step, we’ll use the raster package to retrieve data from GADM that will provide the provincial spatial data for the survey area. We will then use this to find the centroid of the area of interest, which will be used to find the nearest stations. Using raster::getData() fetch level 0 (national) and 1 (provincial) data for the Philippines.

install.packages("raster")

library(raster)

RP0 <- raster::getData(country = "Philippines", level = 0)

RP1 <- raster::getData(country = "Philippines", level = 1)

Now we will select the provinces involved in the survey and make a new object called Central_Luzon from the provincial level data, RP1.

Central_Luzon <- RP1[RP1@data$NAME_1 == "Pampanga" |

RP1@data$NAME_1 == "Tarlac" |

RP1@data$NAME_1 == "Pangasinan" |

RP1@data$NAME_1 == "La Union" |

RP1@data$NAME_1 == "Nueva Ecija" |

RP1@data$NAME_1 == "Bulacan", ]

With a little help from an old acquaintance, Arnold Salvacion and Scott Chamberlain we can create a map inset showing where the Central Luzon Loop survey takes place.

First we’ll use gSimplify() from rgeos to simplify the map of the Philippines to make the map generation in the next few steps quicker.

RP0 <- rgeos::gSimplify(RP0, tol = 0.05)

library(ggplot2)

library(grid)

library(gridExtra)

CL_names <- data.frame(coordinates(Central_Luzon)) # get center coordinates of provinces in Central Luzon

CL_names$label <- Central_Luzon@data$NAME_1

# Main Map

p1 <- ggplot() +

geom_polygon(data = Central_Luzon, aes(x = long, y = lat, group = group),

colour = "grey10", fill = "#fff7bc") +

geom_text(data = CL_names, aes(x = X1, y = X2, label = label),

size = 3, colour = "grey20") +

theme(axis.text.y = element_text(angle = 90, hjust = 0.5)) +

ggtitle("Central Luzon Provinces Surveyed") +

theme_bw() +

xlab("Longitude") +

ylab("Latitude") +

coord_map()

## Regions defined for each Polygons

# Inset

p2 <- ggplot() +

geom_polygon(data = RP0, aes(long, lat, group = group),

colour = "grey10",

fill = "#fff7bc") +

coord_equal() +

theme_bw() +

labs(x = NULL, y = NULL) +

geom_rect(aes(xmin = extent(Central_Luzon)[1],

xmax = extent(Central_Luzon)[2],

ymin = extent(Central_Luzon)[3],

ymax = extent(Central_Luzon)[4]),

alpha = 0,

colour = "red",

size = 1,

linetype = 1) +

theme(axis.text.x = element_blank(),

axis.text.y = element_blank(),

axis.ticks = element_blank(),

axis.title.x = element_blank(),

axis.title.y = element_blank(),

plot.margin = unit(c(0, 0, 0 ,0), "mm"))

grid.newpage()

v1 <- viewport(width = 1, height = 1, x = 0.5, y = 0.5) # plot area for the main map

v2 <- viewport(width = 0.28, height = 0.28, x = 0.67, y = 0.78) # plot area for the inset map

print(p1, vp = v1)

print(p2, vp = v2)

Dissolve Polygons and Find Centroid of Loop Survey Area

Dissolve Polygons and Find Centroid of Loop Survey Area

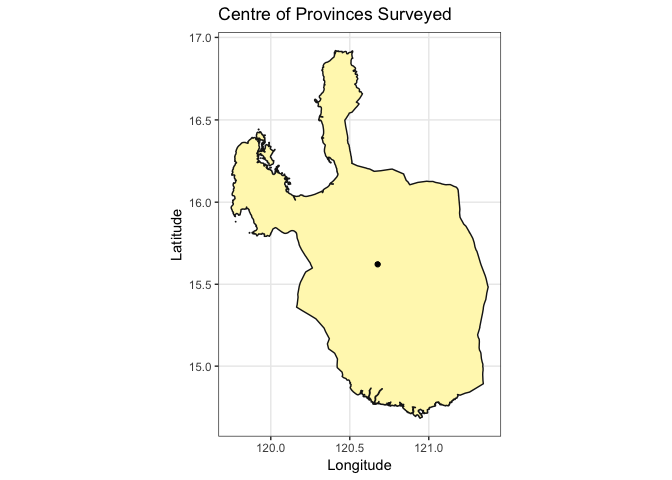

Now that we have the provincial data that we want, we will dissolve the polygons that represent the individual provinces in Central Luzon and find the centroid of all of them, which we will use as the central point for querying stations from the GSOD data set.

Central_Luzon <- rgeos::gUnaryUnion(Central_Luzon)

centroid <- rgeos::gCentroid(Central_Luzon)

ggplot() +

geom_polygon(data = Central_Luzon, aes(x = long, y = lat, group = group),

colour = "grey10", fill = "#fff7bc") +

geom_point(aes(x = centroid@coords[1], y = centroid@coords[2])) +

theme(axis.text.y = element_text(angle = 90, hjust = 0.5)) +

ggtitle("Centre of Provinces Surveyed") +

theme_bw() +

xlab("Longitude") +

ylab("Latitude") +

coord_map()

Next, make a list of stations that are within this area. First we need to fetch the station medadata, “isd-history.csv” from the FTP server and then check which stations fall within a 100km radius of the centre of the provinces we’re interested in.

library(GSODR)

library(readr)

# Fetch station list from NCEI

station_meta <- read_csv(

"ftp://ftp.ncdc.noaa.gov/pub/data/noaa/isd-history.csv",

col_types = "ccccccddddd",

col_names = c("USAF", "WBAN", "STN_NAME", "CTRY", "STATE", "CALL", "LAT",

"LON", "ELEV_M", "BEGIN", "END"), skip = 1)

station_meta$STNID <- as.character(paste(station_meta$USAF,

station_meta$WBAN,

sep = "-"))

loop_stations <- nearest_stations(LAT = centroid@coords[2],

LON = centroid@coords[1],

distance = 100)

loop_stations <- station_meta[station_meta$STNID %in% loop_stations, ]

loop_stations <- loop_stations[loop_stations$BEGIN <= 19591231 &

loop_stations$END >= 20151231, ]

print(loop_stations[, c(1:2, 3, 7:12)])

## # A tibble: 9 × 9

## USAF WBAN STN_NAME LAT LON ELEV_M BEGIN END

## <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 983240 99999 IBA/LUZON ISLAND 15.333 119.967 5.0 19490104 20170315

## 2 983250 99999 DAGUPAN 16.083 120.350 2.0 19450119 20170315

## 3 983270 99999 CLARK INTL 15.186 120.560 147.5 19450214 20170315

## 4 983280 99999 BAGUIO 16.375 120.620 1295.7 19490101 20170315

## 5 984260 41231 SUBIC BAY WEATHER 14.800 120.267 19.0 19450209 20170315

## 6 984290 99999 NINOY AQUINO INTL 14.509 121.020 22.9 19450331 20170315

## 7 984300 99999 SCIENCE GARDEN 14.650 121.050 46.0 19450228 20170315

## 8 984320 99999 AMBULONG 14.083 121.050 11.0 19490205 20170315

## 9 984330 99999 TANAY 14.583 121.367 651.0 19490101 20170315

## # ... with 1 more variables: STNID <chr>

These are all of the stations that are availble within 100km of the centroid of this area and the years for which data are available.

Using

Using get_GSOD() to Fetch the Requested Station Files

This example shows how you could construct a query using the get_GSOD() function. Be aware that it may result in incomplete data and error from the server if it stops responding. We’ve done our best to make GSODR handle these errors, but if it does this, see the following option for using the reformat_GSOD() function.

PHL <- get_GSOD(station =

eval(parse(text = loop_stations[, 12])), years = 1960:2015)

##

## Checking requested station file for availability on server.

##

## Downloading individual station files.

## Starting data file processing

Another Option, Using

Another Option, Using reformat_GSOD()

GSODR provides a function for dealing with local files that have been transferred from the server already as well, reformat_GSOD(). If the previous example with get_GSOD() does not work, this is a good alternative that takes a bit more intervention but gives the same results.

Using your FTP client (e.g., FileZilla) log into the NCEI FTP server, ftp.ncdc.noaa.gov and navigate to /pub/data/gsod/. Manually downloading the files for each station listed above from 1960 to 2015 is possible, but tedious. An easier solution is to simply download the annual files found in each yearly directory, “gsod-YYYY.tar” and untar them locally and then use R to list the available files and select only the files for the stations of interest. Lastly, write the data to disk as a CSV file for saving and later use.

years <- 1960:2015

loop_stations <- eval(parse(text = loop_stations[, 12]))

# create file list

loop_stations <- do.call(

paste0, c(expand.grid(loop_stations, "-", years, ".op.gz"))

)

local_files <- list.files(path = "./GSOD", full.names = TRUE, recursive = TRUE)

local_files <- local_files[basename(local_files) %in% loop_stations]

loop_data <- reformat_GSOD(file_list = local_files)

readr::write_csv(loop_data, file = "Loop_Survey_Weather_1960-2015")

The Data Content and Format

The Data Content and Format

The final data returned by either of these methods will be data that include the following elements for the years of 1960-2015

-

STNID - Station number (WMO/DATSAV3 number) for the location;

-

WBAN - number where applicable–this is the historical “Weather Bureau Air Force Navy” number - with WBAN being the acronym;

-

STN_NAME - Unique text identifier;

-

CTRY - Country in which the station is located;

-

LAT - Latitude. Station dropped in cases where values are < -90 or > 90 degrees or Lat = 0 and Lon = 0;

-

LON - Longitude. Station dropped in cases where values are < -180 or > 180 degrees or Lat = 0 and Lon = 0;

-

ELEV_M - Elevation in metres;

-

ELEV_M_SRTM_90m - Elevation in metres corrected for possible errors, derived from the CGIAR-CSI SRTM 90m database (Jarvis et al. 2008);

-

YEARMODA - Date in YYYY-mm-dd format;

-

YEAR - The year (YYYY);

-

MONTH - The month (mm);

-

DAY - The day (dd);

-

YDAY - Sequential day of year (not in original GSOD);

-

TEMP - Mean daily temperature converted to degrees C to tenths. Missing = NA;

-

TEMP_CNT - Number of observations used in calculating mean daily temperature;

-

DEWP - Mean daily dew point converted to degrees C to tenths. Missing = NA;

-

DEWP_CNT - Number of observations used in calculating mean daily dew point;

-

SLP - Mean sea level pressure in millibars to tenths. Missing = NA;

-

SLP_CNT - Number of observations used in calculating mean sea level pressure;

-

STP - Mean station pressure for the day in millibars to tenths. Missing = NA;

-

STP_CNT - Number of observations used in calculating mean station pressure;

-

VISIB - Mean visibility for the day converted to kilometres to tenths Missing = NA;

-

VISIB_CNT - Number of observations used in calculating mean daily visibility;

-

WDSP - Mean daily wind speed value converted to metres/second to tenths Missing = NA;

-

WDSP_CNT - Number of observations used in calculating mean daily wind speed;

-

MXSPD - Maximum sustained wind speed reported for the day converted to metres/second to tenths. Missing = NA;

-

GUST - Maximum wind gust reported for the day converted to metres/second to tenths. Missing = NA;

-

MAX - Maximum temperature reported during the day converted to Celsius to tenths–time of max temp report varies by country and region, so this will sometimes not be the max for the calendar day. Missing = NA;

-

MAX_FLAG - Blank indicates max temp was taken from the explicit max temp report and not from the ‘hourly’ data. An “*” indicates max temp was derived from the hourly data (i.e., highest hourly or synoptic-reported temperature);

-

MIN - Minimum temperature reported during the day converted to Celsius to tenths–time of min temp report varies by country and region, so this will sometimes not be the max for the calendar day. Missing = NA;

-

MIN_FLAG - Blank indicates max temp was taken from the explicit min temp report and not from the ‘hourly’ data. An “*” indicates min temp was derived from the hourly data (i.e., highest hourly or synoptic-reported temperature);

-

PRCP - Total precipitation (rain and/or melted snow) reported during the day converted to millimetres to hundredths; will usually not end with the midnight observation, i.e., may include latter part of previous day. A value of “.00” indicates no measurable precipitation (includes a trace). Missing = NA; Note: Many stations do not report ‘0’ on days with no precipitation– therefore, ‘NA’ will often appear on these days. For example, a station may only report a 6-hour amount for the period during which rain fell. See FLAGS_PRCP column for source of data;

-

PRCP_FLAG -

-

A = 1 report of 6-hour precipitation amount;

-

B = Summation of 2 reports of 6-hour precipitation amount;

-

C = Summation of 3 reports of 6-hour precipitation amount;

-

D = Summation of 4 reports of 6-hour precipitation amount;

-

E = 1 report of 12-hour precipitation amount;

-

F = Summation of 2 reports of 12-hour precipitation amount;

-

G = 1 report of 24-hour precipitation amount;

-

H = Station reported ‘0’ as the amount for the day (e.g., from 6-hour reports), but also reported at least one occurrence of precipitation in hourly observations–this could indicate a rrace occurred, but should be considered as incomplete data for the day;

-

I = Station did not report any precipitation data for the day and did not report any occurrences of precipitation in its hourly observations–it’s still possible that precipitation occurred but was not reported;

-

-

SNDP - Snow depth in millimetres to tenths. Missing = NA;

-

I_FOG - Indicator for fog, (1 = yes, 0 = no/not reported) for the occurrence during the day;

-

I_RAIN_DRIZZLE - Indicator for rain or drizzle, (1 = yes, 0 = no/not reported) for the occurrence during the day;

-

I_SNOW_ICE - Indicator for snow or ice pellets, (1 = yes, 0 = no/not reported) for the occurrence during the day;

-

I_HAIL - Indicator for hail, (1 = yes, 0 = no/not reported) for the occurrence during the day;

-

I_THUNDER - Indicator for thunder, (1 = yes, 0 = no/not reported) for the occurrence during the day;

-

I_TORNADO_FUNNEL - Indicator for tornado or funnel cloud, (1 = yes, 0 = no/not reported) for the occurrence during the day;

-

ea - Mean daily actual vapour pressure;

-

es - Mean daily saturation vapour pressure;

-

RH - Mean daily relative humidity.

Here’s what the data look like.

## WBAN STNID STN_NAME CTRY STATE CALL LAT LON

## 1 99999 983240-99999 IBA/LUZON ISLAND RP <NA> RPUI 15.333 119.967

## 2 99999 983240-99999 IBA/LUZON ISLAND RP <NA> RPUI 15.333 119.967

## 3 99999 983240-99999 IBA/LUZON ISLAND RP <NA> RPUI 15.333 119.967

## 4 99999 983240-99999 IBA/LUZON ISLAND RP <NA> RPUI 15.333 119.967

## 5 99999 983240-99999 IBA/LUZON ISLAND RP <NA> RPUI 15.333 119.967

## 6 99999 983240-99999 IBA/LUZON ISLAND RP <NA> RPUI 15.333 119.967

## ELEV_M ELEV_M_SRTM_90m BEGIN END YEARMODA YEAR MONTH DAY YDAY

## 1 5 9 19490104 20170315 19730101 1973 01 01 1

## 2 5 9 19490104 20170315 19730102 1973 01 02 2

## 3 5 9 19490104 20170315 19730103 1973 01 03 3

## 4 5 9 19490104 20170315 19730104 1973 01 04 4

## 5 5 9 19490104 20170315 19730105 1973 01 05 5

## 6 5 9 19490104 20170315 19730106 1973 01 06 6

## TEMP TEMP_CNT DEWP DEWP_CNT SLP SLP_CNT STP STP_CNT VISIB VISIB_CNT

## 1 26.8 7 23.8 7 1014.1 7 NA 0 34.3 7

## 2 26.6 5 23.2 5 1013.8 5 NA 0 34.0 5

## 3 25.8 6 23.2 6 1013.5 6 NA 0 34.9 6

## 4 28.4 5 25.0 5 1014.5 5 NA 0 38.0 5

## 5 25.8 4 23.5 4 1014.8 4 NA 0 NA 0

## 6 25.0 8 22.9 8 1014.8 8 NA 0 35.6 8

## WDSP WDSP_CNT MXSPD GUST MAX MAX_FLAG MIN MIN_FLAG PRCP PRCP_FLAG SNDP

## 1 0.4 7 3.0 NA 31 * 24 * 0 I NA

## 2 0.9 5 4.1 NA 33 * 19 * 0 I NA

## 3 0.8 6 4.1 NA 30 * 21 * 0 I NA

## 4 1.0 5 5.1 NA 32 * 23 * 0 I NA

## 5 0.2 4 1.5 NA 30 * 22 * 0 I NA

## 6 0.4 8 2.5 NA 31 * 19 * 0 I NA

## I_FOG I_RAIN_DRIZZLE I_SNOW_ICE I_HAIL I_THUNDER I_TORNADO_FUNNEL EA

## 1 0 0 0 0 0 0 2.9

## 2 0 0 0 0 0 0 2.8

## 3 0 0 0 0 0 0 2.8

## 4 0 0 0 0 0 0 3.2

## 5 0 0 0 0 0 0 2.9

## 6 0 0 0 0 0 0 2.8

## ES RH

## 1 3.5 82.9

## 2 3.5 80.0

## 3 3.3 84.8

## 4 3.9 82.1

## 5 3.3 87.9

## 6 3.2 87.5

Using the GSODR package and R I was able to easily retrieve and provide weather data for the years requested that cover the area of interest for this survey and create a CSV file of the data for use with other software for the analysis.

Using GSOD Data With Climate Data From GSODRdata

Using GSOD Data With Climate Data From GSODRdata

If you want to use climate and ecological data with weather data, we’ve also provided a supplementary data package to go with GSODR, GSODRdata, which provides climate data from five sources in six data frames:

-

CHELSA - Climatic surfaces at 1 km resolution is based on a quasi-mechanistic statistical downscaling of the ERA interim global circulation model (Karger et al. 2016). ESA’s CCI-LC cloud probability monthly averages are based on the MODIS snow products (MOD10A2).

-

CRU_CL_2 - The CRU CL 2.0 data-set (New et al. 2002) comprises monthly grids of observed mean climate from 1961-1990, and covering the global land surface at a 10 minute spatial resolution. There are eight climatic variables available, and also the elevations on the grid: diurnal temperature range, precipitation, mean temperature, wet-day frequency, frost-day frequency, relative humidity, sunshine, and wind-speed. In addition minimum and maximum temperature may be deduced from mean temperature and diurnal temperature range (see FAQ).

-

ESACCI - ESA’s CCI-LC snow cover probability https://maps.elie.ucl.ac.be/CCI/viewer/index.php

-

MODCF - Remotely sensed high-resolution global cloud dynamics for predicting ecosystem and biodiversity distributions (Wilson et al. 2016) provides new near-global, fine-grain (≈1km) monthly cloud frequencies from 15 years of twice-daily MODIS satellite images.

-

WorldClim_Bio - WorldClim Global Climate Data - Free climate data for ecological modeling and GIS (Hijmans et al. 2004) provides freely available bioclimatic variables. These data are freely available for download from https://www.worldclim.org/version1.

-

WorldClim_Clim - WorldClim Global Climate Data - Free climate data for ecological modeling and GIS (Hijmans et al. 2004) provides freely available, average monthly climate data. Current conditions (interpolations of observed data, representative of 1960-1990) are freely available for download from https://www.worldclim.org/version1.

Due to the size of the package, >9Mb, it is only available from GitHub. However, these data frames provide climate and ecological data that corresponds to the GSOD station locations, making it easy to find and work with weather and climate data at the same time. If you’re interested you can find some further examples in the GSODR vignettes.

Conclusions

Conclusions

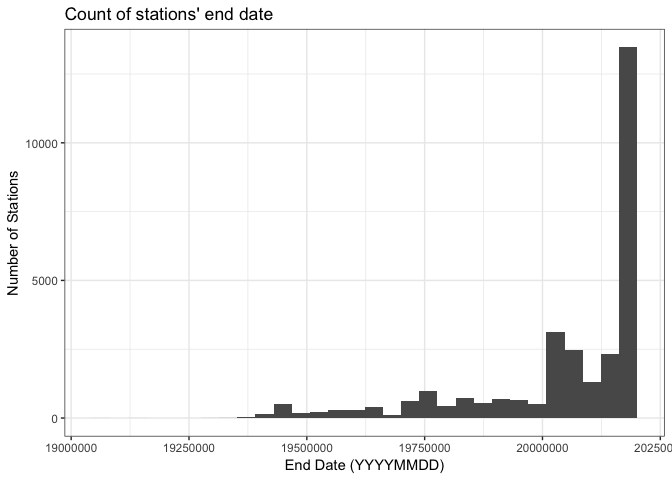

The GSOD data have a wide range of applications and GSODR makes this data more accessible to scientists that need a global weather data set. Using GSODR means that you can efficiently query for a set of years, for specific stations or areas within a given radius. The GSOD data are not perfect, there are many gaps prior to 1973, but in the more recent years the data became more reliable and more stations are being added.

ggplot(station_meta, aes(x = END)) +

geom_histogram() +

xlab("End Date (YYYYMMDD)") +

ylab("Number of Stations") +

ggtitle("Count of stations' end date") +

theme_bw()

How could you use GSODR?

How could you use GSODR?

Let us know how you use GSODR in your work. If you find an issue, please file an issue and we’ll work to get it corrected as quickly as possible. Also, if you think that you have an idea that might make GSODR better let us know that too.

Acknowledgments

Acknowledgments

We’re grateful to Jeff Hanson and Dillon Niederhut who took the time to review GSODR as a part of the rOpenSci onboarding process and the related paper published in the Journal of Open Source Software (Sparks et al., 2017). Their suggestions greatly improved this package. Also, thanks to Scott Chamberlain for his editorial comments on this blog post, including spelling corrections to his name.

References

References

Adam H Sparks, Tomislav Hengl and Andrew Nelson (2017). GSODR: Global Summary Daily Weather Data in R. The Journal of Open Source Software, 2(10). DOI: 10.21105/joss.00177. URL: https://doi.org/10.21105/joss.00177

Notes

Notes

-

Formerly the National Climatic Data Center (NCDC) ↩︎